Weather Eye with

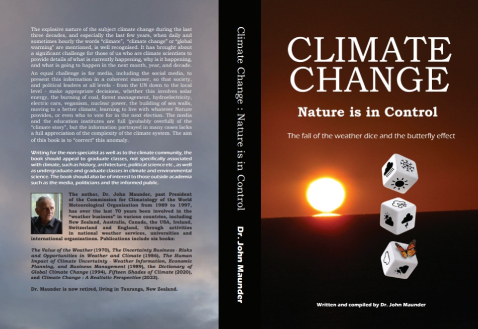

My new book is available from Amazon. An extract of a few pages from Chapter 1:

Damaging weather events inevitably lead to climate evangelists making apocalyptic claims of imminent disaster.

UN Secretary-General António Guterres led the most recent chorus in 2024, talking about “global boiling” and raising alarmism to a fever pitch. Yet more than 1,600 scientists, including two Nobel physics laureates, have signed a declaration stating that “There is no climate emergency.”

That poses a serious political problem for any government that has been arguing the contrary.

An expert opinion, submitted pro bono in November 2023 to the Hague Court of Appeals by three eminent American scientists, presented a devastating refutation of climate catastrophism.

Their conclusions contradict alarmists’ sacred beliefs, including the belief that anthropogenic CO2 will cause dangerous climate change. That removes the desirability of, let alone the need for, net-zero policies that by 2050 would inflict US$275 trillion in useless expenditures on wealthy countries and harm the poorest people in the world’s poorest economies.

Predictably, the study has been ignored by mainstream media.

The foundation for the three scientists’ opinion is, not surprisingly, the scientific method, which Richard Feynman (1918-88), theoretical physicist and 1965 Nobelist, defined with trademark clarity: “It doesn’t matter how beautiful your theory is, it doesn’t matter how smart you are. If it doesn’t agree with experiment, it’s wrong.”

Joe Oliver, writing in Canada’s Financial Post on 2 July 2024, says: “To be reliable, science must be based on observations consistent with predictions, rather than consensus, peer reviews, opinions of government-controlled bodies like the IPCC and definitely not cherry-picked, exaggerated or falsified data. The paper makes the point colloquially: ‘Peer review of the climate literature is a joke. It is pal review.’”

Misrepresentation Of Climate

Science is described by William Kininmonth (in a study published in News Weekly on August 19, 2024) as the systematic study of the structure and behaviour of the physical and natural world, based on observation and experiment. That is, any hypothesis must be derived from a sound representation of climate science. The UN’s Intergovernmental Panel on Climate Change (IPCC) uses global and annual average temperature as an index for describing climate change.

However, this index is misleading because it hides and does not account for the regional and seasonal differences in the rates of warming that have been observed. The human-caused global warming hypothesis, to be valid, must also explain these regional and seasonal variations.
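The way an average can mask regional differences is easy to illustrate. The sketch below is not taken from Kininmonth's study: the two regions, their warming rates and their area fractions are made-up values chosen only to show the arithmetic, and `trend_per_century` is a hypothetical helper name.

```python
import numpy as np

def trend_per_century(years, temps):
    """Least-squares linear trend of temps against years, in deg C per century."""
    years = np.asarray(years, dtype=float)
    temps = np.asarray(temps, dtype=float)
    # Centre the years so the straight-line fit is well conditioned.
    slope, _intercept = np.polyfit(years - years.mean(), temps, 1)
    return slope * 100.0

# Made-up anomaly series for two hypothetical regions (not observations).
years = np.arange(1979, 2023)
fast_region = 0.040 * (years - 1979)   # warming at 4.0 deg C per century
slow_region = 0.005 * (years - 1979)   # warming at 0.5 deg C per century

# Hypothetical area fractions of the two regions; they must sum to 1.
weights = {"fast": 0.2, "slow": 0.8}
global_mean = weights["fast"] * fast_region + weights["slow"] * slow_region

for name, series in [("fast region", fast_region),
                     ("slow region", slow_region),
                     ("area-weighted mean", global_mean)]:
    print(f"{name}: {trend_per_century(years, series):.2f} deg C per century")
```

The single averaged trend sits between the two regional rates and says nothing about how far apart they are. The same helper could be applied to each season separately by passing only the values for that season.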

Since 1979, conventional and satellite observations have been systematically analysed as the basis for global weather forecasting. The analyses are stored in a database (NCEP/NCAR R1) maintained by the US National Centers for Environmental Prediction (NCEP).

A satellite-derived database of atmospheric temperature is held by the University of Alabama in Huntsville (UAH). The observations show that the index of the global and annual rate of Earth’s warming, 1.7°C per century, does not capture the differing regional and seasonal characteristics. Any explanation for the recent warming must be able to explain why these regional and seasonal differences occur. Kininmonth notes that CO2 is described as a well-mixed greenhouse gas in the atmosphere and its concentration is measured in parts per million (ppm).

CO2 is constantly flowing between the atmosphere, the biosphere, and the oceans. The annual average concentration has little regional variation but there is a marked seasonal cycle with the range of the cycle being a maximum over the Arctic. CO2 is taken from the atmosphere, primarily by way of two processes: by photosynthesis with growing terrestrial plants, and by absorption into the colder oceans. CO2 enters the atmosphere by way of decaying terrestrial plant material, from outgassing from warmer oceans, and through emissions associated with the industrial and lifestyle activities of humans.

Because of the large natural flows, the average residence time in the atmosphere of a CO2 molecule is only about four years. The emissions generated by human activities have grown to about 10% of the natural flows.
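The four-year figure is a simple stock-and-flow ratio. As a sketch, let $M$ be the mass of CO2 held in the atmosphere and $F$ the total annual flow out of it (both symbols are introduced here for illustration and do not appear in Kininmonth's text). The average residence time is then

$$\tau = \frac{M}{F}$$

so a residence time of about four years corresponds to annual flows of roughly one quarter of the atmospheric stock, $F \approx M/4$.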

Despite the seasonal atmospheric CO2 concentration peaking over the Arctic, far from human settlement, it is claimed that the rising atmospheric concentration is caused by human activity. Regardless of the origins, CO2 concentration is increasing in the atmosphere.

As summarised by IPCC, the increasing concentration of CO2 reduces longwave radiation emissions to space, thus upsetting Earth’s long-term radiation balance. The slight reduction in flow of radiation energy to space, called ‘radiation forcing’, is claimed to be a source of heat that warms the Earth’s atmosphere. The IPCC recognises climate forcing as ‘a modelling concept’ that constitutes a simple but important means of estimating the relative surface temperature impacts due to different natural and anthropogenic radiative causes.

A fundamental flaw in this modelling concept is the assumption that, prior to industrialisation, Earth was in radiation balance. First, nowhere on Earth is there radiation balance: over the tropics absorption of solar radiation exceeds emission of longwave radiation to space; over middle and high latitudes emission of longwave radiation to space exceeds absorption of solar radiation. Earth is only in near radiation balance because heat is transported by winds and ocean currents from the tropics to middle and high latitudes. Second, the latitude focus for absorption of solar radiation varies with the seasons.
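The first point can be written compactly. In this sketch the symbols are assumptions introduced for illustration, and all quantities are taken as averages around a latitude circle: $A(\varphi)$ is the absorbed solar radiation at latitude $\varphi$, $E(\varphi)$ is the longwave emission to space, and $N(\varphi)$ is their difference.

$$N(\varphi) = A(\varphi) - E(\varphi), \qquad \langle N \rangle = \frac{1}{2}\int_{-\pi/2}^{\pi/2} N(\varphi)\,\cos\varphi \, d\varphi$$

Locally, $N(\varphi) > 0$ over the tropics and $N(\varphi) < 0$ over middle and high latitudes. Only the area-weighted global mean $\langle N \rangle$ can be close to zero, and the local imbalances are made up by the heat carried in winds and ocean currents.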

There is a need for the ocean and atmosphere transport to vary with the seasonal shift. Consequently, the seasonally changing solar radiation causes Earth’s global average near surface (2 metre) air temperature to oscillate with an annual range of about 3°C.

Rather than being in balance, as claimed by the IPCC, the net radiation exchange with space oscillates about a balance point. The reason for the oscillation is the differing fractions of landmass in each of the hemispheres.

The Northern Hemisphere has a higher proportion of land, while the Southern Hemisphere has much more ocean surface than land surface. Because the radiation exchange with space is not in balance, the essential requirement of radiation forcing, as used in climate modelling, is invalid. Moreover, given the strong natural flows of heat within the climate system (oceans, atmosphere, and ice sheets), there is no reason to expect that, as CO2 concentration increases, the small reduction in longwave energy to space will heat the atmosphere.

Kininmonth says in his conclusion that the hypothesis and computer modelling that suggest human activities and the increase in atmospheric CO2 are the cause of recent warming cannot be sustained. He says that there never was a balance between absorbed solar radiation and longwave radiation emitted to space. Earth’s radiation to space changes with the seasonally varying temperatures of the surface and atmosphere; this is according to well understood physics. The radiation forcing scheme that is the basis for including CO2 in computer modelling leads to false outcomes.

The index of global and annual average temperature used by the IPCC is crude and hides the important regional and seasonal differences in the rate of warming as experienced over the recent 44 years. It is these regional and seasonal differences, together with well understood meteorological science, that point to slow variations of the ocean circulations being the cause of recent warming.

Kininmonth concludes that the recent warming is consistent with natural cyclical variations of the climate system. Attempts to halt the current warming by reducing atmospheric CO2 concentrations will certainly fail. Government policies that are based on the alarming but erroneous IPCC temperature projections are fraught.

*********************

May the odds be ever in your favour

Climate tech companies can tell you the odds that a flood or wildfire will ravage your home. But what if their odds are all different? A Bloomberg.com report dated August 2024 puts it this way: “May the odds be ever in your favour.”

Bloomberg further comments that humans have tried to predict the weather for as long as there have been floods and droughts; but in recent years, climate science, advanced computing and satellite imagery have supercharged their ability to do so. Computer models can now gauge the likelihood of fire, flooding, or other perils at the scale of a single building lot and looking decades into the future. Startups that develop these models have proliferated.

The models are already guiding the decisions of companies across the global economy. Hoping to climate-proof their assets, government-sponsored mortgage behemoth Fannie Mae, insurance broker Aon, major insurers such as Allstate and Zurich Insurance Group, large banks, consulting firms, real estate companies and public agencies have flocked to modellers for help.

Two ratings giants, Moody’s and S&P, brought risk modelling expertise in-house through acquisitions. Bloomberg further suggests: “There’s no doubt that this future-facing information is badly needed. The Federal Emergency Management Agency, often criticized for the inadequacy of its own flood maps, will now require local governments to assess future flood risk if they want money to build back after a disaster.”

But, according to Bloomberg, there’s a big catch. Most private risk modellers closely guard their intellectual property, which means their models are essentially black boxes. A White House scientific advisers’ report warned that climate risk predictions were sometimes “of questionable quality.” Research non-profit CarbonPlan puts it more starkly: Decisions informed by models that can’t be inspected “are likely to affect billions of lives and cost trillions of dollars.”

Everyone on the planet is exposed to climate risk, and modelling is an indispensable tool for understanding the biggest consequences of rising temperatures in the decades ahead. However, zooming in closer with forecasting models, as the policy makers and insurers adopting these tools are doing, could leave local communities vulnerable to unreliable, opaque data. As black-box models become the norm across, for example, housing and insurance, there’s real danger that decisions made with these tools can harm people with fewer resources or less ability to afford higher costs.

One telltale sign of this uncertainty can be seen in the startlingly different outcomes from a pair of models designed to measure the same climate risk. Bloomberg Green (2024) compared two different models showing areas in California’s Los Angeles County that are vulnerable to flooding in a once-in-a-century flood event. The analysis considers only current flood risk. Still, the models match just 21 per cent of the time.
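Bloomberg's exact method for scoring a "match" is not described here, so the following is only one plausible way to measure it: the share of parcels flagged by either model that are flagged by both. The function name `agreement_share` and the parcel IDs are hypothetical.

```python
from typing import Set

def agreement_share(flagged_a: Set[str], flagged_b: Set[str]) -> float:
    """Share of parcels flagged by either model that are flagged by both."""
    either = flagged_a | flagged_b
    if not either:
        return 1.0  # neither model flags anything, so they trivially agree
    return len(flagged_a & flagged_b) / len(either)

# Hypothetical parcel IDs flagged as at risk in a once-in-a-century flood.
model_a = {"parcel-1", "parcel-2", "parcel-3"}
model_b = {"parcel-3", "parcel-4"}

print(f"{agreement_share(model_a, model_b):.0%}")
```

On this measure two models can each flag a similar number of properties and still score poorly, because what counts is whether they flag the same ones.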

Model inputs — the metrics fed into a black box and how they’re weighted — obviously affect the outputs. One of the above models was released in 2020 by First Street Technology, and accounts for coastal flooding caused by waves and storm surge. (If the American public has any familiarity with climate risk modelling, it’s thanks to First Street, whose risk scores have been integrated into millions of online real-estate listings.)

The other, newer model was created by a team led by researchers at the University of California at Irvine. It focuses on rain-induced and riverine flooding and incorporates high-resolution ground-elevation data alongside granular information on local drainage infrastructure.

To fill out the picture, Bloomberg Green reviewed a third model, created by the property information firm CoreLogic. Its data is used by many banks and insurers, and by the federal National Flood Insurance Program to help set rates for some 5 million US homeowners. When it comes to Los Angeles County properties at high or extreme risk, CoreLogic’s model agrees with First Street’s or Irvine’s less than 50% of the time.

Much of this variation may come down to modellers’ different sets of expertise — climate science, building codes, insurance, engineering, hydrology — and how they’re applied. But these data disagreements can have real-world consequences. Depending on which models a government office or insurer considers, it could mean building protective drainage in a relatively safe area or raising premiums in the wrong neighbourhood.

And while experts are well versed in modelling methods, that’s not the case for all users of such tools, including homebuyers. First Street’s forecasts — the company says it can predict the risks of flooding, fire, extreme heat and high winds on an individual-property level — are translated into risk scores for US addresses that anyone can check at riskfactor.com. Redfin and realtor.com include these scores in home listings.

My new book is available from Amazon.com